1. Document history

| Date | Author | Changes |
|---|---|---|
| 2023-03-31 | dh | Add configuration of NixOS 22.11 in a virtual machine |
| 2023-03-05 | dh | Add remote IP logging in the Apache web server to log original IPv4 addresses in the IPv6-only web servers |
| 2022-12-31 | dh | Cover Ubuntu 22.04, add KVM host installation script with automation of all base steps, remove references to Ubuntu 16.04, proofread the whole book |
| 2022-02-19 | dh | Add section on a Java setting which may cause problems, some other minor changes |
| 2021-05-19 | dh | Add DNS64 access control description on the physical host, some minor changes |
| 2021-03-21 | dh | Add firewall chapter with Shorewall and sshguard and cover systemd-networkd delay problem of virtual machines |
| 2020-08-09 | dh | Some updates on integration with web site and GitHub, license added, preface rewritten |
| 2020-08-09 | dh | Initial version |

2. Preface

This is a book about setting up a Linux server and a number of services on it. It describes a so-called "virtualized setup": the physical machine on which everything runs is divided into a number of "virtual machines", each running a completely independent operating system instance. The virtual machines are held together by the operating system on the physical host, which is sometimes referred to as the "hypervisor".

This setup is not unusual at all. In fact, these days it is the normal way of working with the many-core, multi-gigabyte systems available for purchase or rent.

There is, however, still a remarkable shortage of descriptions of how to set up such a system with IPv6 as the primary protocol. Even though the classic IPv4 address pool has been exhausted for several years now, guides continue to describe how to work with one public IPv4 address for the host system and a network of private IPv4 addresses for the virtual machines.

The setup described here genuinely uses IPv6 as its communication protocol. The most important advantage is that all virtual machines can be accessed directly via official IP addresses without any further ado. Of course, this only works if the client also has IPv6 connectivity, which is finally the case for more and more systems these days.

We also show how IPv6 and IPv4 get interconnected in this setup: How can an IPv6-only virtual machine access an IPv4-only server? How can IPv4-only clients access services on the IPv6-only virtual machines? Are there services which definitely need an IPv4 address on a virtual host (spoiler: yes) and how do we attach them?

Setting up the physical host is a rather complex process. This book is accompanied by the bash script install-kvm-host.sh, which performs all the basic steps on a Hetzner Online root server with Ubuntu 22.04. If you execute it, you can start installing virtual machines immediately afterwards.

2.1. License

This guide is published under the Creative Commons Attribution-ShareAlike 4.0 International license.

You are free to:

- Share — copy and redistribute the material in any medium or format
- Adapt — remix, transform, and build upon the material

for any purpose, even commercially.

Under the following terms:

- Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.
- ShareAlike — If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

Read more on https://creativecommons.org/licenses/by-sa/4.0/

install-kvm-host.sh is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

2.2. Distribution

This guide is an Asciidoc document. It is hosted on GitHub and can be read online (or downloaded) as an HTML or PDF document:

- HTML version

- PDF version

- Asciidoc sources

install-kvm-host.sh is distributed together with the Asciidoc sources in the GitHub repository.

2.3. About the author

Born in 1972, Dirk Hillbrecht started working with computers in 1986, when his school offered a programming course using Turbo Pascal 3.0 on Apple II computers. In the 1990s he studied "Mathematics with focus on Computer Science" in Hannover. He administered networks of Sun and Silicon Graphics machines at the university and witnessed the rise of Linux almost from its beginnings. Since 2000, he has been writing application software for fleet management and carsharing in Java. He still lives in Hannover, Germany.

2.4. About this guide

After having administered Unix and Linux systems since the 1990s, I rented my first real root server for myself only in 2016. While that machine worked and served things quite reliably, I felt it aging pretty fast. It was an Ubuntu 16.04 system with only 4 GB of RAM and a quite outdated Athlon64 processor.

Due to these parameters it was not possible to run virtualized setups, which I wanted to do to separate services. For example, that machine had a complete e-mail server setup which I intended to use for my private e-mail but still hesitated to activate, as it was rather complex and "manually set up". I had followed an instruction guide on the internet which even its author declared outdated only two years later.

There were other shortcomings like a less-than-optimal Let's Encrypt setup. Let's Encrypt started almost at the same time as my server installation, and there have been quite a few optimisations since then.

All in all, my setup was aging, it had no room for many more services, and in the meantime you could get far more capable hardware for not so much more money.

So, in 2018 I decided to start "from scratch" with the system design. The first and most important design decision was about IP connectivity. By then, IPv4 had run out of address space for more than five years, and IPv6 had been available for a decade or so. I wanted to do it in a modern way:

- I assume that I have only one IPv4 address but a sufficiently large IPv6 network routed to the rented server.
- I build the system in a way that all "real" services run in virtual machines managed by Linux' KVM system.
- The physical host gets the IPv4 address.
- All virtual machines get only IPv6 addresses. No official IPv4, not even a dual stack with private IPv4 and network address translation (NAT). Only IPv6.
- Virtual machines can access IPv4-only services on the internet through a NAT64/DNS64 gateway on the host.
- Services on the virtual machines are generally only reachable via IPv6.
- To serve incoming IPv4 requests, application proxies on the physical host forward traffic to the actual service handlers on a virtual machine if needed.
- If for any reason a service on a virtual machine absolutely needs its own direct IPv4 connectivity, it is added "on top" of the setup.

Implementing this scheme initially took me about a week. I wrote all steps down and published a series of articles in my personal blog. As systems evolved and interest remained, I continued to update the articles. Eventually, I came to the conclusion that this way of updating was not flexible enough. So, I decided to rewrite the articles (and some unpublished articles with further explanations) into an Asciidoc document and publish it on GitHub. As usual, this project became a bit bigger than I expected, and after integrating and updating all information I suddenly had a PDF document of 80 pages, which, as you see, has grown further since then.

When reworking this guide for Ubuntu 22.04 in December 2022, I decided to build an automated process for the basic steps of setting up the physical host. The result is install-kvm-host.sh, which is now distributed together with this guide. This surely makes my life easier, as I no longer have to perform all the steps in this guide manually (which is error-prone). It should also help everyone else who wants to set up a server this way.

Have fun reading this guide! I hope it helps you set up your own modern, IPv6-first system.

|

About IP addresses

IP addresses in this guide are made up and sometimes scrambled like 1.2.X.Y or 1234:5:X:Y::abcd. X and Y actually have to be numbers, of course…

|

The physical host

The first step of a virtualized server setup is to prepare the physical host. It is sometimes also called "hypervisor" or "domain zero". It all means the same: the actual hardware ("bare metal") with an operating system on it which hosts all the virtual machines to come. I'll stick with the term "physical host" in this document when I refer to this installation.

The operating system of the physical host can be totally different from the operating systems on the virtual machines. It can be a specialized system like VMware's ESXi or an extremely stripped-down Linux or BSD system. The setup described here, however, is based on a stock Ubuntu installation for the physical host. Ubuntu is very widely used, with tons of descriptions for different configurations, so chances are good that you will find solutions for specific problems of your installation "on the internet" rather easily.

The physical host has to cope with the specific network infrastructure it is installed in. Depending on this external setup, configuration can be quite different between installations.

3. Physical hosts with one /64 network

IPv6 standards define that every host with IPv6 connectivity must have at least one /64 network assigned. The German hosting provider Hetzner Online offers exactly such an environment: they route only one /64 network to each host. That rules out any routed setup between the physical host and the virtual machines. We'll use the only network we have to access both the physical host and all virtual machines. The default gateway for the physical host will be the link-local address pointing to Hetzner's infrastructure. The default gateway for the virtual machines will be the physical host.

|

Why Hetzner Online?

This document aims to be an independent guide for setting up IPv6-first systems. However, my main source of information and research are the servers I administer myself. They are located at the German hosting provider Hetzner Online, so my knowledge and experience come mainly from their environment. Hopefully, other environments will be added to this guide in the future to make it less centred on one particular provider. |

3.1. Initial setup of the host system at Hetzner’s

If you rent a Hetzner server, order it with the "rescue system" booted. That gives you the most control over how the system is configured.

I suggest that you access the server in this early stage by its IP address only, as we'll change the IPv6 address of the system later in the install process. If you want to have a DNS entry, use something interim to throw away later, e.g. <plannedname>-install.example.org.

As suggested above, I obtained my server in Hetzner's "rescue system", which allows installing the OS through the installimage script. I wanted to work as much as possible with default components and configurations, so I decided on the Ubuntu 22.04 [1] install image offered by Hetzner.

|

Stay with default setups as much as possible

I strongly advise you to stick with the setups offered by your hosting provider as much as possible. It increases your chances of getting support and of finding documentation if you run into problems.

|

Logging into the new server gives you a welcome screen somewhat like this:

dh@workstation:~$ ssh -l root 2a01:4f8:1:3::2

The authenticity of host '2a01:4f8:1:3::2 (2a01:4f8:1:3::2)' can't be established.

ECDSA key fingerprint is SHA256:dbSkzn0MlzoJXr8yeEuR0pNp9FEH4mNsfKgectkTedk.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '2a01:4f8:1:3::2' (ECDSA) to the list of known hosts.

-------------------------------------------------------------------

Welcome to the Hetzner Rescue System.

This Rescue System is based on Debian 8.0 (jessie) with a newer

kernel. You can install software as in a normal system.

To install a new operating system from one of our prebuilt

images, run 'installimage' and follow the instructions.

More information at http://wiki.hetzner.de

-------------------------------------------------------------------

Hardware data:

CPU1: AMD Athlon(tm) 64 X2 Dual Core Processor 5600+ (Cores 2)

Memory: 3704 MB

Disk /dev/sda: 750 GB (=> 698 GiB)

Disk /dev/sdb: 750 GB (=> 698 GiB)

Total capacity 1397 GiB with 2 Disks

Network data:

eth0 LINK: yes

MAC: 00:24:21:21:ac:99

IP: 241.61.86.241

IPv6: 2a01:4f8:1:3::2/64-/77777777777

RealTek RTL-8169 Gigabit Ethernet driver

root@rescue ~ #

You might want to write down the MAC address and IP addresses of the system. Note, however, that they are also included in the delivery e-mail sent by Hetzner Online when the server is ready. You can also see the addresses in the Hetzner Online server configuration interface or request them with the ip a command in the rescue system.

|

SSH keys for Hetzner rescue images

If you put your SSH public key into your Hetzner account and select it in the order process for the machine, it will not only be put into the rescue system but also into the root account of the freshly installed machine. If you work this way, you never have to enter any passwords during the installation process. You can also select the key each time you request a rescue system.

|

The system has two hard disks. I use them as a software RAID 1 as offered by the install script. This allows for at least some disaster recovery in case of a disk failure. For systems like this, I do not create any further partitions at all (apart from the swap and /boot partitions suggested by Hetzner). The KVM disks will go to qcow2 files which are simply put into the host's file system. Fortunately, modern file systems have no problems with 200+ GB files, and this way all the virtual guest hard disks are also covered by the RAID.

Hetzner's installimage asks in a dialog for the image to use. This guide is applicable to the Ubuntu images, preferably the 22.04 version, but 20.04 also works. 18.04 would be ok, too, but this version reaches its end of standard support in April 2023 [2], so don't use it for new installations any more.

The image installation process is controlled by a configuration file. A stripped-down version of it for the system I work on reads like this:

## HARD DISK DRIVE(S):
# Onboard: SAMSUNG HD753LJ
DRIVE1 /dev/sda
# Onboard: SAMSUNG HD753LJ
DRIVE2 /dev/sdb

## SOFTWARE RAID:
## activate software RAID? < 0 | 1 >
SWRAID 1
## Choose the level for the software RAID < 0 | 1 | 10 >
SWRAIDLEVEL 1

## BOOTLOADER:
BOOTLOADER grub

## HOSTNAME: (change this to your system name, without domain name)
HOSTNAME whatever

## PARTITIONS / FILESYSTEMS: (keep the defaults)
PART swap swap 4G
PART /boot ext3 512M
PART / ext4 all

## OPERATING SYSTEM IMAGE: (you have selected this earlier in installimage)
IMAGE /root/.oldroot/nfs/install/../images/Ubuntu-1804-bionic-64-minimal.tar.gz

|

Installing the server deletes all data previously on it!

Just to be sure: If you use installimage (or similar installation routines from other providers) on an existing system, all data on that system will be deleted. If unsure, check twice that you are on the right system. A mistake at this point may be impossible to correct afterwards!

|

Hetzner Online GmbH - installimage

Your server will be installed now, this will take some minutes

You can abort at any time with CTRL+C ...

: Reading configuration done

: Loading image file variables done

: Loading ubuntu specific functions done

1/16 : Deleting partitions done

2/16 : Test partition size done

3/16 : Creating partitions and /etc/fstab done

4/16 : Creating software RAID level 1 done

5/16 : Formatting partitions

: formatting /dev/md/0 with swap done

: formatting /dev/md/1 with ext3 done

: formatting /dev/md/2 with ext4 done

6/16 : Mounting partitions done

7/16 : Sync time via ntp done

: Importing public key for image validation done

8/16 : Validating image before starting extraction done

9/16 : Extracting image (local) done

10/16 : Setting up network config done

11/16 : Executing additional commands

: Setting hostname done

: Generating new SSH keys done

: Generating mdadm config done

: Generating ramdisk done

: Generating ntp config done

12/16 : Setting up miscellaneous files done

13/16 : Configuring authentication

: Fetching SSH keys done

: Disabling root password done

: Disabling SSH root login without password done

: Copying SSH keys done

14/16 : Installing bootloader grub done

15/16 : Running some ubuntu specific functions done

16/16 : Clearing log files done

INSTALLATION COMPLETE

You can now reboot and log in to your new system with

the same password as you logged in to the rescue system.

root@rescue ~ # reboot

Installing the system this way puts a fresh and rather small Ubuntu system on the disk. Note that ssh will complain loudly about the changed host key of the system, but that is ok: you are now booting the installed system, which has a different host key than the rescue system you used before.

dh@workstation:~$ ssh -l root 2a01:4f8:1:3::2
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!
[...]
Offending ECDSA key in /home/dh/.ssh/known_hosts
  remove with:
  ssh-keygen -f "/home/dh/.ssh/known_hosts" -R "2a01:4f8:1:3::2"
ECDSA host key for 2a01:4f8:1:3::2 has changed and you have requested strict checking.
Host key verification failed.
dh@workstation:~$ ssh-keygen -f "/home/dh/.ssh/known_hosts" -R "2a01:4f8:1:3::2"
# Host 2a01:4f8:1:3::2 found
/home/dh/.ssh/known_hosts updated.
dh@workstation:~$ ssh -l root 2a01:4f8:1:3::2
The authenticity of host '2a01:4f8:1:3::2 (2a01:4f8:1:3::2)' can't be established.
ECDSA key fingerprint is SHA256:z2+iz/3RRC3j6GT8AtAHJYnZvP9kdzw8fW8Aw5GPl0q.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '2a01:4f8:1:3::2' (ECDSA) to the list of known hosts.
Welcome to Ubuntu 18.04.1 LTS (GNU/Linux 4.15.0-38-generic x86_64)
[...]
root@merlin ~ #

After booting into it, I had some hours of remarkably degraded performance, as the RAID 1 had to synchronise both disks completely. Be aware of this; your server will become faster once this is over.

Use cat /proc/mdstat to see what's going on on your hard disks.

root@merlin ~ # cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]

md0 : active raid1 sdb1[1] sda1[0]

4190208 blocks super 1.2 [2/2] [UU]

md2 : active raid1 sdb3[1] sda3[0]

727722816 blocks super 1.2 [2/2] [UU]

[>....................] resync = 4.0% (29775168/727722816) finish=147.8min speed=78670K/sec

bitmap: 6/6 pages [24KB], 65536KB chunk

md1 : active raid1 sdb2[1] sda2[0]

523712 blocks super 1.2 [2/2] [UU]

unused devices: <none>

If you install an e-mail server (or have some external mail service you want to use for system e-mails), you should enable alert messages for the case that the RAID degrades due to a disk failure. A RAID only protects against hardware failures if failed hardware is actually replaced quickly enough.
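In /proc/mdstat, a mirror with a missing or failed disk shows an underscore in its status field, e.g. [2/1] [U_] instead of [2/2] [UU]. The mdadm package can send such alerts itself via the MAILADDR setting in /etc/mdadm/mdadm.conf. If you additionally want a check that you can call from cron or a monitoring system, a minimal sketch could look like the following. The function names degraded_arrays and check_raid are hypothetical and not part of install-kvm-host.sh. The parsing relies only on the status pattern just described:

```shell
#!/bin/sh
# Hypothetical check: print the md arrays whose member status contains "_",
# which /proc/mdstat uses to mark a missing or failed disk (e.g. [U_]).
# The mdstat path is a parameter so the function can be tried on a copy.
degraded_arrays() {
    mdstat="${1:-/proc/mdstat}"
    awk '
        /^md[0-9]+ :/ { dev = $1 }
        /\[[U_]*_[U_]*\]/ { print dev }
    ' "$mdstat"
}

# Returns 1 (and names the arrays on stderr) if at least one array is degraded.
check_raid() {
    bad=$(degraded_arrays "$1")
    if [ -n "$bad" ]; then
        echo "degraded RAID arrays: $bad" >&2
        return 1
    fi
    echo "all RAID arrays healthy"
}
```

Because check_raid signals problems through its exit status, it can be combined with whatever alerting mechanism you already use.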

|

Test the rescue system

This is a good moment to test whether Hetzner's rescue mechanism works. Sometimes, servers are not correctly configured in the BIOS and do not load the rescue system even if this is requested in the interface.

If something is wrong here, contact support and let them solve the problem. If you make mistakes in the host’s network configuration, you will need the rescue mode to sort things out. |

3.2. Continue using the install-kvm-host.sh script

This guide features the script install-kvm-host.sh, which performs all the steps described in the following sections on a Hetzner root server with a freshly installed Ubuntu 22.04. You may now skip ahead to the description of install-kvm-host.sh to learn how to use it.

If any problems arise, you can come back to the following sections to sort things out, as the script performs exactly the actions described there.

If you want to do things on your own, if you work with an installation other than Ubuntu 22.04, or if you are not on a Hetzner root server, read on to perform the setup steps manually.

3.3. Check preconditions on the server

You should check that the system supports virtualisation at all. Issue

grep -E -c '(vmx|svm)' /proc/cpuinfo

and verify that the result is greater than 0. Then, apply

apt install cpu-checker && kvm-ok

and check that the result is

INFO: /dev/kvm exists
KVM acceleration can be used

If not, the BIOS settings of the system must be corrected. Contact the hosting provider to sort that out.
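If you want to run the first check from a provisioning script of your own, it can be wrapped in a small function. This is a minimal sketch. The name check_virt_flags is hypothetical (install-kvm-host.sh may do this differently), and the function only looks at the CPU flags, so kvm-ok remains the more complete test:

```shell
#!/bin/sh
# Hypothetical helper, not taken from install-kvm-host.sh: check whether the
# CPU advertises hardware virtualisation (Intel VT-x = vmx, AMD-V = svm).
# The cpuinfo path is a parameter so the function can be tried on a copy.
check_virt_flags() {
    cpuinfo="${1:-/proc/cpuinfo}"
    if [ ! -r "$cpuinfo" ]; then
        echo "cannot read $cpuinfo" >&2
        return 2
    fi
    # grep -c counts matching lines, usually one "flags" line per logical CPU;
    # "|| true" keeps a count of 0 from aborting scripts running under set -e
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" || true)
    if [ "$count" -gt 0 ]; then
        echo "ok: $count CPU entries with vmx/svm"
        return 0
    fi
    echo "no vmx/svm flags found, check the BIOS settings" >&2
    return 1
}
```

On the host, simply call check_virt_flags without arguments.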

3.4. Put /tmp into a ramdisk

One thing which is totally independent of IPv6 and KVM is the /tmp directory. It contains temporary files, and I like to put it into a ramdisk.

Add one line to /etc/fstab and replace /tmp with the following commands:

echo "none /tmp tmpfs size=2g 0 0" >> /etc/fstab && \
mv /tmp /oldtmp && mkdir /tmp && mount /tmp && rm -rf /oldtmp

This setup allows /tmp to grow up to 2 GB, which is ok if the system has more than, say, 30 GB of memory. You can, of course, allow more or less. Note that the memory is only occupied if /tmp really stores that much data. An empty /tmp does not block any memory!

The mkdir creates /tmp without any special access rights. Fortunately, declaring the file system as tmpfs in /etc/fstab above makes the access rights 1777 (or rwxrwxrwt), which is exactly what we need for /tmp.

You should reboot the system after this change, as wiping /tmp this way may well confuse running processes.
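Note that running the echo command above twice appends a second /tmp line to /etc/fstab. If you script this step, the following is a minimal sketch of an idempotent variant for the fstab part only. The function name add_tmpfs_line is hypothetical, and moving, mounting and rebooting are left to you as described above:

```shell
#!/bin/sh
# Hypothetical helper: append a tmpfs entry for /tmp to an fstab file,
# but only if no (uncommented) entry for the /tmp mount point exists yet.
add_tmpfs_line() {
    fstab="${1:-/etc/fstab}"
    size="${2:-2g}"
    # field 2 of an fstab line is the mount point
    if awk '$1 !~ /^#/ && $2 == "/tmp" { found = 1 } END { exit !found }' "$fstab"; then
        echo "/tmp already in $fstab, nothing to do"
        return 0
    fi
    echo "none /tmp tmpfs size=$size 0 0" >> "$fstab"
    echo "added tmpfs entry for /tmp (size=$size) to $fstab"
}
```

Called as add_tmpfs_line /etc/fstab 4g, it would allow /tmp to grow to 4 GB instead of the 2 GB used in this guide.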

|

On the reboots

You will read "reboot the system" often in this guide. This is not a joke! We configure very basic system and network settings here, and it is crucial that these settings are correct when the system starts up! Check this step by step by rebooting and fix any problems before continuing. Otherwise, your server will be unreliable, and that's a bad thing!

|

3.5. Adjust the time zone

One tiny piece of the puzzle is the timezone of the freshly installed machine. At least the Hetzner Online installation process leaves the server with UTC as its timezone. If you want to have it in the local timezone, change it via

timedatectl set-timezone <timezonename>

You get the available timezones with timedatectl list-timezones. For Germany, the command is timedatectl set-timezone "Europe/Berlin".

3.6. Preparing the network settings of the host

We do now have a freshly installed system. Unfortunately, it is not quite ready to serve as a KVM host. For this, we first have to configure a network bridge on the system.

I must say that I felt rather uncomfortable with Hetzner's IPv6 approach in the beginning. Having only one /64 IPv6 network rules out a routed setup. Due to the way IPv6 SLAAC address autoconfiguration works, you cannot sensibly split this network into smaller ones. I really suggest reading "Why Allocating a /64 is Not Wasteful and Necessary" and especially "The Logic of Bad IPv6 Address Management" to find out how the semantics of the IPv6 address space differ from IPv4. If you have a hoster who gives you a /56 or even a /48 network, you can surely manage your addresses differently. Most probably, you will go with a routed setup.

However, since my start on the IPv6 road, I have learned that Hetzner's approach is not that wrong. They use the link-local fe80:: address range for gateway definitions, and this is a totally valid approach.

We have to use what we get. First, enable IPv6 forwarding globally by issuing

sysctl -w net.ipv6.conf.all.forwarding=1

Also enable this setting in /etc/sysctl.conf to make it permanent.
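To make the forwarding setting permanent, a drop-in file below /etc/sysctl.d/ works as well as editing /etc/sysctl.conf, and it is easier to script. This is a minimal sketch. The file name 99-ipv6-forwarding.conf and the function name are my own assumptions and are not taken from install-kvm-host.sh:

```shell
#!/bin/sh
# Hypothetical helper: persist IPv6 forwarding in a sysctl drop-in file.
# The file name 99-ipv6-forwarding.conf is an arbitrary choice.
# The directory is a parameter so the function can be tried elsewhere.
write_forwarding_dropin() {
    dir="${1:-/etc/sysctl.d}"
    file="$dir/99-ipv6-forwarding.conf"
    mkdir -p "$dir"
    # overwrite instead of append, so repeated runs leave exactly one line
    printf '%s\n' 'net.ipv6.conf.all.forwarding=1' > "$file"
    echo "$file"
}
```

On the host, run write_forwarding_dropin followed by sysctl --system to load the setting. Afterwards, sysctl net.ipv6.conf.all.forwarding should report 1.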

Now use ip a to get the device name and MAC address of the system's physical network card:

root@merlin ~ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:24:21:21:ac:99 brd ff:ff:ff:ff:ff:ff

inet 241.61.86.241/32 scope global enp2s0

valid_lft forever preferred_lft forever

inet6 2a01:4f8:1:3::2/64 scope global

valid_lft forever preferred_lft forever

inet6 fe80::224:21ff:fe21:ac99/64 scope link

valid_lft forever preferred_lft forever

Your network device's name may differ. It can be something like enpXsY as in this example, or enoX. On all modern Linux distributions, however, the name of an Ethernet device will begin with en…
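If you want to determine the device name in a script rather than reading it from the ip a output, one option is to take the device that carries the IPv4 default route. This is a minimal sketch. It assumes that the default route is still on the physical device (i.e. the bridge has not been configured yet), and the function name is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the device that carries the default route.
# Reads "ip route show default" output on stdin, e.g.
#   default via 241.61.86.225 dev enp2s0 proto static onlink
default_route_dev() {
    awk '$1 == "default" { for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}
```

On the example system, ip route show default | default_route_dev should print enp2s0.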

Here the common track for all systems ends. In the Linux world, multiple network configuration setups have evolved over time. The most common ones are:

- Direct setup in configuration files in /etc/network. This is the old-school networking setup, especially when combined with a System-V initialisation process. I do not cover it here, but you will find a plethora of installation guides on the internet for it.
- Systemd-based configuration with files in /etc/systemd/network. This is how many modern distributions handle system start and network setup these days. Ubuntu did it until 17.04, Hetzner's Ubuntu did it longer. I cover this in section 3.6.3.
- Netplan with a configuration in /etc/netplan. This kind of "meta-configuration" has been used by Ubuntu since 17.10 and by Hetzner since November 2018 for 18.04 and 18.10. I describe the needed changes in the following section.

3.6.1. Ubuntu 18.04 and later with Netplan

Ubuntu 18.04 and later versions come with Netplan to configure the network. Since about November 2018, Hetzner has used this setup in their install process. Note that earlier Ubuntu installations were provided with the systemd-networkd-based setup described below.

Netplan uses configuration files with YAML syntax. In most cases, there is only one file: /etc/netplan/01-netcfg.yaml. For freshly installed Hetzner servers with Ubuntu 22.04, it looks something like this:

root@merlin /etc/netplan # cat 01-netcfg.yaml
### Hetzner Online GmbH installimage
network:
  version: 2
  renderer: networkd
  ethernets:
    enp2s0:
      addresses:
        - 241.61.86.241/32
        - 2a01:4f8:1:3::2/64
      routes:
        - on-link: true
          to: 0.0.0.0/0
          via: 241.61.86.225
        - to: default
          via: fe80::1
      nameservers:
        addresses:
          - 185.12.64.2
          - 2a01:4ff:ff00::add:1
          - 185.12.64.1
          - 2a01:4ff:ff00::add:2

What you do now is:

- Define a bridge device br0;
- Assign all settings of the physical Ethernet device to that bridge;
- Bind the bridge to that device;
- Set the bridge's MAC address to that of the physical device, otherwise traffic will not be routed;
- Disable all network configuration on the physical device.

After these changes, the Netplan configuration from above looks like this:

root@merlin ~ # cat /etc/netplan/01-netcfg.yaml
### Hetzner Online GmbH installimage
network:
  version: 2
  renderer: networkd
  ethernets:
    enp2s0:
      dhcp4: false
      dhcp6: false
  bridges:
    br0:
      accept-ra: false
      macaddress: 00:24:21:21:ac:99
      interfaces:
        - enp2s0
      addresses:
        - 241.61.86.241/32
        - 2a01:4f8:1:3::2/64
      routes:
        - on-link: true
          to: 0.0.0.0/0
          via: 241.61.86.225
        - to: default
          via: fe80::1
      nameservers:
        addresses:
          - 185.12.64.2
          - 2a01:4ff:ff00::add:1
          - 185.12.64.1
          - 2a01:4ff:ff00::add:2

Note that you also disable any IPv6 autoconfiguration on the br0 device by adding accept-ra: false to its configuration. We'll set up the router advertisement daemon later on for the virtual machines, but it should not interact with the physical host.
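If you set up several hosts, you may want to generate this file from a few variables instead of editing it by hand. The following is a minimal sketch that prints the bridge configuration shown above. The function name and its positional parameters are hypothetical. The nameserver list is left out for brevity, and you should still compare the output with Hetzner's original file before trying it with Netplan:

```shell
#!/bin/sh
# Hypothetical generator for the bridge setup described above.
# Arguments: physical device, its MAC, IPv4/32, IPv6/64, IPv4 gateway.
# Nameservers are omitted here; copy them from Hetzner's original file.
# YAML must be indented with spaces, never tabs.
netplan_bridge_yaml() {
    dev="$1" mac="$2" ipv4="$3" ipv6="$4" gw4="$5"
    cat <<EOF
network:
  version: 2
  renderer: networkd
  ethernets:
    $dev:
      dhcp4: false
      dhcp6: false
  bridges:
    br0:
      accept-ra: false
      macaddress: $mac
      interfaces:
        - $dev
      addresses:
        - $ipv4
        - $ipv6
      routes:
        - on-link: true
          to: 0.0.0.0/0
          via: $gw4
        - to: default
          via: fe80::1
EOF
}
```

For the example host, netplan_bridge_yaml enp2s0 00:24:21:21:ac:99 241.61.86.241/32 2a01:4f8:1:3::2/64 241.61.86.225 prints the bridge part of the configuration above.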

Netplan has the very nice capability to apply a new configuration to a running system and roll it back if something goes wrong. Just type netplan try. If the countdown counts down (a few stalled seconds at the beginning are allowed), just hit Enter and make the change permanent. Otherwise, wait for two minutes and Netplan will restore the old configuration, so that you should be able to log in again and fix the problem without further ado.

On success, I suggest finishing with a complete reboot to be really sure that the new configuration is applied on system startup.

After a reboot, the network device list should look like this:

root@merlin ~ # ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master br0 state UP group default qlen 1000

link/ether 00:24:21:21:ac:99 brd ff:ff:ff:ff:ff:ff

3: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:24:21:21:ac:99 brd ff:ff:ff:ff:ff:ff

inet 241.61.86.241/32 scope global br0

valid_lft forever preferred_lft forever

inet6 2a01:4f8:1:3::2/64 scope global

valid_lft forever preferred_lft forever

inet6 fe80::224:21ff:fe21:ac99/64 scope link

valid_lft forever preferred_lft forever

Note that the physical device enp2s0 and the bridge br0 have the same MAC address. This is intentional!
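To check this after a reboot without comparing the ip a output by eye, you can compare the address files in sysfs. This is a minimal sketch. The helper name same_mac is hypothetical, and the sysfs root is a parameter only so that the function can be tried on a fake directory tree:

```shell
#!/bin/sh
# Hypothetical check: the bridge must carry the MAC of the physical NIC,
# otherwise Hetzner will not route traffic to the host.
same_mac() {
    phys="$1" bridge="${2:-br0}" sysroot="${3:-/sys/class/net}"
    mac_phys=$(cat "$sysroot/$phys/address") || return 2
    mac_br=$(cat "$sysroot/$bridge/address") || return 2
    if [ "$mac_phys" = "$mac_br" ]; then
        echo "ok: $bridge and $phys share $mac_phys"
    else
        echo "MISMATCH: $phys=$mac_phys $bridge=$mac_br" >&2
        return 1
    fi
}
```

On the example host, same_mac enp2s0 should report that br0 and enp2s0 share 00:24:21:21:ac:99.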

You should now test that you can log in to the system via both IPv6 and IPv4. Use ssh -6 <hostname> and ssh -4 <hostname> to enforce the IP protocol version.

3.6.2. Ubuntu 20.04 with gateway6 directive

Note that Ubuntu 20.04 (and earlier) did not define the IPv6 default route with a standard routes definition, but used a specific gateway6 key:

network:
  [...]
  ethernets:
    [...]
      routes:
        - on-link: true
          to: 0.0.0.0/0
          via: 241.61.86.225
      gateway6: fe80::1

Move this definition as it is into the br0 section:

network:
  [...]
  bridges:
    br0:
      [...]
      routes:
        - on-link: true
          to: 0.0.0.0/0
          via: 241.61.86.225
      gateway6: fe80::1

3.6.3. Ubuntu 18.04 and other systems with systemd-networkd

|

This section is not updated any more

This section is not updated any more. Actually, Ubuntu gave up on direct systemd configuration.

|

Until October 2018, Hetzner used a systemd-networkd-based setup on Ubuntu, even with 18.04. If you have such a system, you get the same result in a different way. Creating a bridge for virtual machines using systemd-networkd explains the basics nicely.

With this system, go to /etc/systemd/network and define a bridge device in file 19-br0.netdev:

[NetDev]
Name=br0
Kind=bridge
MACAddress=<MAC address of the physical network card of the host>

[Bridge]
STP=true

It is extremely important to define the MAC address, or Hetzner will not route traffic to the system. STP does not seem to be mandatory, but it does not hurt either, so I kept it in.

Then, assign the bridge to the physical device in 20-br0-bind.network:

[Match]
Name=eno1

[Network]
Bridge=br0

Now copy the original file created by Hetzner (here: 10-eno1.network) to 21-br0-conf.network and change the matching name from the physical device to the bridge. In fact, you only replace eno1 (or whatever your network device's name is) with br0.

You also add IPv6AcceptRA=no to prevent the physical host's network configuration from being influenced by the SLAAC router advertisements of radvd, which is installed later:

[Match]
Name=br0

[Network]
Address=<IPv6 address assigned by Hetzner, do not change>
# fe80::1 is always the IPv6 gateway in Hetzner's network setup
Gateway=fe80::1
Gateway=<IPv4 gateway assigned by Hetzner, do not change>
IPv6AcceptRA=no

[Address]
Address=<IPv4 address of the system assigned by Hetzner, do not change>
Peer=<IPv4 peer assigned by Hetzner, do not change>

Rename the original file 10-eno1.network to something not detected by systemd, e.g. 10-eno1.networkNO. Keep it around in case something goes wrong.

After these changes, the physical device has no networks attached. This is important so that the bridge can grab it on initialization. Let’s see whether everything works and reboot the system.

If something goes wrong: Boot into the rescue system, mount the partition, and rename 10-eno1.networkNO back to its original name ending in .network.

Reboot again.

Investigate. Repeat until it works…

3.7. Ensure correct source MAC address

Our virtual machines will have their own MAC addresses; otherwise, IPv6 autoconfiguration would not work. Unfortunately, these MAC addresses will also leak through the bridge into Hetzner's network. That can lead to trouble, as the provider only accepts the MAC address actually assigned to the main server.

To prevent such problems, rewrite the source MAC addresses using the ebtables command.

You might need to install it first using apt install ebtables.

Then use:

ebtables -t nat -A POSTROUTING -j snat --to-src <MAC address of the physical network card of the host>

I've added this to /etc/rc.local.

On a default installation of Ubuntu 22.04 (or 20.04 - or 18.04), this file does not exist.

If you create it, make it look like this:

    #!/bin/bash
    # force source MAC address of all packets to the official address of the physical server
    ebtables -t nat -A POSTROUTING -j snat --to-src 00:24:21:21:ac:99
    exit 0

Replace the address in the example with your actual physical MAC address!

Also, make the file executable with chmod +x /etc/rc.local.

"The internet" claims that you need to add other files to systemd for /etc/rc.local to be evaluated in Ubuntu.

At least for me, this was not needed; it "just worked".
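If you would rather not copy the MAC address by hand, you can generate the file. The following is a minimal POSIX shell sketch. The function name rc_local_for_mac is hypothetical, and the usage below assumes the physical interface is called eno1 (check with ip link). The function only prints the file, so you can review it before installing it.

```shell
#!/bin/sh
# Hypothetical helper: print an /etc/rc.local that rewrites the source MAC
# address of all bridged packets to the given (physical) MAC address.
rc_local_for_mac() {
    mac=$1
    # Accept only six colon-separated hex octets, e.g. 00:24:21:21:ac:99
    if ! printf '%s\n' "$mac" | grep -Eq '^([0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$'; then
        echo "invalid MAC address: $mac" >&2
        return 1
    fi
    cat <<EOF
#!/bin/bash
# force source MAC address of all packets to the official address of the physical server
ebtables -t nat -A POSTROUTING -j snat --to-src $mac
exit 0
EOF
}
```

For example: rc_local_for_mac "$(cat /sys/class/net/eno1/address)" > /tmp/rc.local && install -m 755 /tmp/rc.local /etc/rc.local. Writing to a temporary file first matters. If the interface name is wrong and the MAC address comes back empty, the function fails and the existing /etc/rc.local is left untouched.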

Check whether the rule has been added:

    root@merlin ~ # ebtables -t nat -L
    Bridge table: nat
    Bridge chain: PREROUTING, entries: 0, policy: ACCEPT
    Bridge chain: OUTPUT, entries: 0, policy: ACCEPT
    Bridge chain: POSTROUTING, entries: 1, policy: ACCEPT
    -j snat --to-src 00:24:21:21:ac:99 --snat-target ACCEPT
    root@merlin ~ #

Reboot the system once more to check whether the rule survives a reboot.

3.8. Change IPv6 address

This step is not performed by install-kvm-host.sh.

|

You might think about changing the IPv6 address of the physical host.

Hetzner Online always configures the host part of the IPv6 address as 0:0:0:2, i.e. the address ends in ::2.

While there is nothing wrong with that, giving the host a random address makes the whole installation a bit less vulnerable to brute-force attacks.

Fortunately, changing the address is really simple.

In the Netplan-based setup, it is in /etc/netplan/01-netcfg.yaml. Look for the addresses of the br0 device:

    network:
      [...]
      bridges:
        br0:
          [...]
          addresses:
            - 2a01:4f8:1:3::2/64

Change its host part (the lower 64 bits) to more or less whatever you like:

    - 2a01:4f8:1:3:6745:a24b:cc39:9d1/64
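To pick such a host part, a few bytes from /dev/urandom are enough. The following POSIX shell sketch uses a hypothetical function name. It takes the first four groups of your /64 prefix and appends four random 16-bit groups. It does not compress zero groups with ::, but the uncompressed form is still valid notation.

```shell
#!/bin/sh
# Hypothetical helper: build a /64 address with a random 64-bit host part.
# $1 is the first four groups of the provider's prefix, e.g. 2a01:4f8:1:3
random_ipv6_in_prefix() {
    prefix=$1
    iid=
    # Read 8 random bytes as four 16-bit hex words
    for word in $(od -An -N 8 -t x2 /dev/urandom); do
        # printf drops leading zeros, as usual in IPv6 notation
        iid="${iid:+$iid:}$(printf '%x' "0x$word")"
    done
    echo "$prefix:$iid/64"
}
```

For example, random_ipv6_in_prefix 2a01:4f8:1:3 prints an address of the same shape as 2a01:4f8:1:3:6745:a24b:cc39:9d1/64, ready to be pasted into the addresses list.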

If you work with systemd-networkd, the network configuration is in /etc/systemd/network/21-br0-conf.network if you followed this guide:

    [Network]
    Address=2a01:4f8:1:3::2/64

Change it to

    [Network]
    Address=2a01:4f8:1:3:6745:a24b:cc39:9d1/64

You can also add the new address instead of replacing the old one. Then, your server can be accessed through both addresses. While having multiple IPv6 addresses on the same device is no problem at all, it can make the configuration of services more difficult, as the correct source address for outgoing connections has to be selected. I suggest not doing this. Stay with one IPv6 address.

Use netplan try or systemctl restart systemd-networkd to apply the new settings.

Note that if you are connected via IPv6, your connection will be interrupted and you have to reconnect.

If you are connected via IPv4 (e.g. by ssh <IPv4-address> or ssh -4 <hostname>), your connection should survive.

systemd-networkd, however, might need several seconds to sort everything out.

If everything works, add a reboot. In theory, restarting the network configuration should be sufficient, but at least back in the days of Ubuntu 18.04 and earlier my system sometimes behaved strangely after this change.

ssh to the new address should now work. If it doesn’t and you are locked out, again use the rescue system to sort it out.

3.9. Add DNS entries

Now is the time to add the physical host to the DNS:

- Add an AAAA record in the domain the system should be reachable in.
- Add a PTR record in the hoster’s reverse DNS entries. If there is already an entry for the former address, you can remove it by simply wiping out the server name and pressing "Enter".
- While you’re at it, also add the A record and the PTR record for the IPv4 address of the host.

|

Keep DNS time-to-live short!

I strongly suggest that you set the TTL for all DNS entries as short as possible during the setup, something between 2 and 5 minutes.

If you make a mistake and have a TTL of multiple hours or even a day, you may have serious issues with name resolution until the wrong entries have expired from all caches.

|

|

The rescue system IP address

If you ever have to reboot your server into Hetzner’s rescue system, keep in mind that it will get its original IPv6 address ending in ::2.

You will not be able to access it through its DNS name.

You might want to add a DNS entry for <servername>-rescue.example.org for such cases.

Of course, you have to remember that, too…

|

At this stage, lean back for a moment! The difficult part is done. You have the network setup of your KVM host up and running. The rest is much easier and is unlikely to cut off network access to the system. Also, the steps that follow are much less provider-specific. While the initial network setup might work considerably differently with another hosting provider, chances are good that the following steps are the same regardless of where you have placed your host.

4. Setup the KVM environment

The physical host is now up and running. To actually host virtual machines with IPv6-first connectivity, some more services need to be installed.

4.1. NAT64 with Tayga

As I wrote, our virtual machines shall have IPv6-only internet access. That implies that they cannot access systems which are IPv4-only. Unfortunately, even in 2022 there are quite popular sites like github.com which do not have any IPv6 connectivity at all. To make such systems accessible from the guest systems, we set up a NAT64 service which performs network address translation for exactly this case.

I decided to go with the "Tayga" server. Its scope is limited to performing NAT64. This makes it necessary to add further services to make all this really usable, but it also minimizes configuration complexity.

4.1.1. How Tayga works (in this setup)

Tayga acts as a network address translator.

It receives incoming IPv6 packets targeted at an address in the 64:ff9b::/96 network.

It takes the lowest 32 bits of this address and uses them as the IPv4 target address.

For example, the IPv4 address 1.2.3.4 is represented by the IPv6 address 64:ff9b::102:304.

|

Representation of IPv4 address parts in IPv6 addresses

IPv4 uses decimal numbers to represent address parts, IPv6 uses hexadecimal representation.

In an IPv4 address like 1.2.3.4, the octets 1 and 2 become the hexadecimal group 102, and the octets 3 and 4 become the group 304. It is allowed to write the lowest 32 bits of an IPv6 address in the usual IPv4 dotted-decimal syntax,

so the address can also be written as 64:ff9b::1.2.3.4. I refrain from this syntax in this guide. |
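The conversion is plain arithmetic: each pair of IPv4 octets becomes one 16-bit hexadecimal group. The following POSIX shell sketch does it in both directions. Both function names are hypothetical. The reverse function only handles the two-group notation used in this guide, not the mixed 64:ff9b::1.2.3.4 form.

```shell
#!/bin/sh
# Map an IPv4 address into the NAT64 well-known prefix 64:ff9b::/96,
# e.g. 1.2.3.4 -> 64:ff9b::102:304
nat64_from_ipv4() {
    IFS=. read -r a b c d <<EOF
$1
EOF
    printf '64:ff9b::%x:%x\n' $((a * 256 + b)) $((c * 256 + d))
}

# Reverse direction for addresses written as 64:ff9b::HHHH:LLLL
ipv4_from_nat64() {
    tail=${1#64:ff9b::}
    hi=${tail%%:*}
    lo=${tail#*:}
    printf '%d.%d.%d.%d\n' $((0x$hi >> 8)) $((0x$hi & 255)) $((0x$lo >> 8)) $((0x$lo & 255))
}
```

For example, nat64_from_ipv4 140.82.118.3 prints 64:ff9b::8c52:7603. This is the address DNS64 synthesizes for github.com in the bind test of the next chapter.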

Additionally, Tayga takes the IPv6 source address and maps it onto a private IPv4 address, e.g. one out of 192.168.255.0/24.

With these two IPv4 addresses it constructs an IPv4 packet with the content of the received IPv6 packet and puts it into the IPv4 network stack of the physical host.

Now, Linux takes over. It uses its standard source NAT mechanisms to map the IPv4 packet with the private source address onto the public IPv4 address of the machine and sends it out. When the answer packet arrives, Linux converts the target address back to the private IPv4 address the packet originated from and forwards it to the Tayga process.

Tayga can rebuild the IPv6 origin of the communication from the private IPv4 target address of the answer packet.

It also can derive the correct 64:ff9b::/96 source address from the IPv4 source address of the packet.

With these two addresses it builds an IPv6 packet with the same content as the received IPv4 packet and sends it to the actual IPv6 communication partner.

The whole scheme looks like this:

4.1.2. Installing Tayga on the physical host

Start by installing the tayga service with the usual apt install tayga.

In /etc/tayga.conf, enable the disabled ipv6-addr directive as this is needed for working with the well-known prefix. Set the IPv6 address to something random in your IPv6 subnet:

ipv6-addr 2a01:4f8:1:3:135d:6:4b27:5f

Additionally, switch the prefix directive from the activated 2001… one to the 64:ff9b::/96 one:

    # prefix 2001:db8:1:ffff::/96
    prefix 64:ff9b::/96

The whole Tayga configuration reads like this afterwards:

    # Minimum working NAT64 Tayga configuration for KVM host with IPv6-only guests

    # (A) Basic setup
    # Device name, this is the default
    tun-device nat64
    # Data dir for stateful NAT information
    data-dir /var/spool/tayga

    # (B) IPv6 setup
    # The "well-known" prefix for NAT64
    prefix 64:ff9b::/96
    # IPv6 address, from the official ::/64 network
    ipv6-addr 2a01:4f8:X:Y:14a5:69be:7e23:89

    # (C) IPv4 setup
    # Pool of dynamic addresses
    dynamic-pool 192.168.255.0/24
    # IPv4 address, not to be used otherwise in the network
    ipv4-addr 192.168.255.1

Test the new setup by starting tayga once in foreground:

    systemctl stop tayga    # only needed if the service has already been started
    tayga -d --nodetach

This should give something like this:

    starting TAYGA 0.9.2
    Using tun device nat64 with MTU 1500
    TAYGA's IPv4 address: 192.168.255.1
    TAYGA's IPv6 address: 2a01:4f8:1:3:135d:6:4b27:5f
    NAT64 prefix: 64:ff9b::/96
    Note: traffic between IPv6 hosts and private IPv4 addresses (i.e. to/from 64:ff9b::10.0.0.0/104, 64:ff9b::192.168.0.0/112, etc) will be dropped. Use a translation prefix within your organization's IPv6 address space instead of 64:ff9b::/96 if you need your IPv6 hosts to communicate with private IPv4 addresses.
    Dynamic pool: 192.168.255.0/24

Stop the manually started instance with Ctrl-C.

|

Enable the service explicitly on Ubuntu 18.04 and earlier

On Ubuntu 18.04 (and earlier), you have to enable the service explicitly. Change the following setting in /etc/default/tayga:

    # Change this to "yes" to enable tayga
    RUN="yes"

|

Launch the service with systemctl start tayga. After that, systemctl status tayga should report the Active state active (running); the log lines in the status output should end with

... systemd[1]: Started LSB: userspace NAT64.

|

Forgot to enable the service on Ubuntu 18.04 and earlier?

If the Active state is active (exited) and the protocol says something about set RUN to yes, you have forgotten to enable the RUN option in /etc/default/tayga. Correct it as described above and issue systemctl stop tayga and systemctl start tayga.

|

4.1.3. Tayga and firewalls

As described above, Tayga uses the Linux network stack for the IPv4 source NAT step.

For this, a masquerading (source NAT) rule is added to the kernel's NAT table.

You can see it using e.g. iptables:

    # iptables -t nat -L
    [...]
    Chain POSTROUTING (policy ACCEPT)
    target     prot opt source               destination
    [...]
    MASQUERADE  all  --  192.168.255.0/24     anywhere

It is important that this rule exists, otherwise NAT64 will not work! Keep this in mind especially if you install a firewall on the physical host. If that firewall overwrites the complete rule set, it will also drop this rule and render Tayga non-functional. We cover below how to integrate Tayga with the Shorewall firewall.
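After installing or reconfiguring a firewall, check that the masquerading rule is still in place. iptables -t nat -S POSTROUTING prints the same rules as the listing above, but in iptables-save syntax, which is easier to match in a script. The following POSIX shell sketch uses a hypothetical function name. It assumes Tayga's dynamic pool 192.168.255.0/24 from the configuration above; pass another pool as the first argument if yours differs.

```shell
#!/bin/sh
# Succeeds if iptables-save style rules on stdin contain a MASQUERADE rule in
# POSTROUTING for the given source pool (default: Tayga's 192.168.255.0/24).
has_nat64_masquerade() {
    awk -v pool="${1:-192.168.255.0/24}" '
        $1 == "-A" && $2 == "POSTROUTING" && / -j MASQUERADE/ {
            for (i = 3; i < NF; i++)
                if ($i == "-s" && $(i + 1) == pool) found = 1
        }
        END { exit !found }'
}
```

Used like this: iptables -t nat -S POSTROUTING | has_nat64_masquerade || echo "Tayga's MASQUERADE rule is missing" >&2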

4.2. DNS64 with bind

In the last chapter, we have assumed that the IPv6-only system maps IPv4-only targets on a makeshift IPv6 address. The question remains how it is tricked into doing this. We solve this problem now.

4.2.1. The concept of DNS64

NAT64 is usually used together with a "DNS64" name server. This is a specially configured name server. If a client asks it for an IPv6 name resolution, i.e. an AAAA name service record, and there is only an IPv4 A record for the requested name, the DNS64 name server "mocks up" an AAAA record by combining the IPv4 address and a "well-known prefix" into a synthetic IPv6 address. This address - surprise, surprise - points directly to a nicely prepared NAT64 server, so the IPv6 system talks to an IPv4 system transparently hidden behind the NAT64 proxy.

4.2.2. Installing bind with DNS64

We set up the DNS64 server using a classic bind DNS server. Modern versions include DNS64; it only has to be activated. Start the installation with the usual apt install bind9.

Our bind is a forwarding-only server only for our own virtual machines. On Debian-derived systems, the bind options needed for this setup are located in /etc/bind/named.conf.options. Edit that file and enter the following entries:

options {

directory "/var/cache/bind";

forwarders {

2a01:4f8:0:1::add:1010; # Hetzner name servers

2a01:4f8:0:1::add:9999;

2a01:4f8:0:1::add:9898;

};

dnssec-validation auto;

auth-nxdomain no; # conform to RFC1035

listen-on {};

listen-on-v6 {

<IPv6 network assigned by provider>::/64;

};

allow-query { localnets; };

dns64 64:ff9b::/96 {

clients { any; };

};

};

The actual important definition is the dns64 section at the bottom of the options definitions. It enables the DNS64 mode of bind and defines the IPv6 address range into which the addresses should be converted.

It is also important to define listen-on {}; to disable listening on IPv4 altogether, as we do not need it. Restricting allow-query to localnets is also important to prevent the server from becoming an open resolver. We only need it for our internal network.

The forwarders section defines the name servers this bind asks if it does not know the answer itself, which is almost always the case. I put Hetzner’s name servers here. You can use either the DNS servers of your hoster or provider, or a free and open server like Google’s public DNS at 2001:4860:4860::8888 and 2001:4860:4860::8844.

|

Check the networks twice

Check the network in listen-on-v6 and also check the forwarders. Your whole IP address resolution will not work if one of these is wrong.

|

Restart the daemon and check that it is enabled and running:

    systemctl restart bind9
    systemctl status bind9

After these steps, you have a working DNS64 server which you can use for all your virtual machines on the system. You can test that it really answers with DNS64-changed entries by querying something which does not have an IPv6 address:

    root@physical:~# host github.com # Query using external default DNS server
    github.com has address 140.82.118.3
    github.com mail is handled by [...]
    root@physical:~# host github.com 2a01:4f8:1:2:3:4:5:6 # Give IPv6 address of local server
    [...]
    github.com has address 140.82.118.3
    github.com has IPv6 address 64:ff9b::8c52:7603
    github.com mail is handled by [...]

Note how the DNS server running on the physical host returns the additional IPv6 address with 64:ff9b prefix. To be sure that the local server is really addressed, give its IPv6 address as additional parameter to the host command as shown above.
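To script this check, filter the host output for synthesized addresses. The following POSIX shell sketch uses a hypothetical function name. It prints every 64:ff9b:: address it finds and fails if there is none. DNS64 only synthesizes an AAAA record when the name has no real one, so test with an IPv4-only name like github.com.

```shell
#!/bin/sh
# Print the synthesized NAT64 addresses from the output of the "host" command.
# Exits non-zero if the answer contains no 64:ff9b:: address.
dns64_answers() {
    awk '/has IPv6 address 64:ff9b::/ { print $NF; found = 1 } END { exit !found }'
}
```

Used like this: host github.com <IPv6 address of the physical host> | dns64_answers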

|

Using an external DNS64 server

So far, the name server is only used for DNS64. Alternatively, you can use the Google servers 2001:4860:4860::6464 and 2001:4860:4860::64, which offer this service (yes, these are different servers from the public DNS servers mentioned above). Their replies are compatible with our NAT64 setup. However, having your own server reduces external dependencies and allows for additional services later on.

|

4.2.3. Restricting DNS64 to certain virtual machines

You can restrict the DNS64 service to certain virtual machines on the host. This might be needed because a machine should explicitly not connect to IPv4 servers, or because it has its own IPv4 address and should use it to connect to the IPv4 internet instead of going through NAT64.

DNS64 access restriction is done via bind9’s access control lists. Just define an access control list for the DNS64 service and refer to it in the service configuration:

acl dns64clients {

# address specification

};

options {

[...]

dns64 64:ff9b::/96 {

clients { dns64clients; }; # Refer to the ACL defined above

};

};

There are two ways to specify the servers to allow DNS64 access:

- You can simply specify the IPv6 addresses of all virtual machines which are allowed to use DNS64. DNS64 ACL with a positive host list:

      acl dns64clients {
        2a01:4f8:1:2:a:bc:345:9;
        2a01:4f8:1:2:a:bc:678:e;
        2a01:4f8:1:2:a:bc:432:7;
        [...]
      };

  You might work with net definitions (e.g. 2a01:4f8:1:2:a:bc::/96;), but normally this does not make much sense. The IPv6 addresses of your virtual machines are derived from the MAC addresses of their (virtual) network cards, and those are assigned randomly when the virtual machine is created. So, just stick with the actual, full IP addresses here.
- You can also define the access control list the other way around and specify those virtual hosts which should not use DNS64. DNS64 ACL with a negative host list:

      acl dns64clients {
        !2a01:4f8:1:2:a:bc:567:d;
        !2a01:4f8:1:2:a:bc:901:3;
        !2a01:4f8:1:2:a:bc:864:b;
        [...]
        any; # Grant access for all others!
      };

  This option is better if DNS64 is the norm in your setup and you only want to exclude a small number of specific servers.

  Note that the final entry in your list must be any; if you work with negative host specifications. Otherwise, no DNS64 service is granted to anyone!
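With a longer exclusion list, it is easy to forget the final any; entry. The following POSIX shell sketch uses a hypothetical function name. It prints the negative ACL from a list of addresses and always appends that entry.

```shell
#!/bin/sh
# Hypothetical helper: print a negative bind9 ACL that denies DNS64 to the
# given IPv6 addresses and grants it to everyone else.
dns64_exclude_acl() {
    echo "acl dns64clients {"
    for addr in "$@"; do
        printf '    !%s;\n' "$addr"
    done
    echo "    any; # grant access for all others, must be the last entry"
    echo "};"
}
```

You could write the output to a separate file and include it at the top of /etc/bind/named.conf.options, before the options block. A file name like /etc/bind/dns64-acl.conf, included with include "/etc/bind/dns64-acl.conf";, would work; that name is only an example. Check the result with named-checkconf before restarting bind9.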

4.3. Router advertisement with radvd

With NAT64 and DNS64 in place, we’re almost ready to serve virtual machines on the host. The last missing bit is the network configuration.

Of course, you could configure your virtual hosts' network manually. However, IPv6 offers very nice auto-configuration mechanisms - and they are not difficult to install. The key component is the "router advertisement daemon". It’s more or less the IPv6-version of the notorious DHCP service used in IPv4 setups to centralize the IP address management.

For this service, we use the radvd router advertisement daemon on the bridge device so that our virtual machines get their network setup automatically by reading IPv6 router advertisements.

Install radvd and also radvdump for testing through the usual Debian/Ubuntu apt install radvd radvdump.

Then, create the configuration file /etc/radvd.conf. It should contain the following definitions:

interface br0 {

AdvSendAdvert on;

AdvManagedFlag off;

AdvOtherConfigFlag off;

AdvDefaultPreference high;

prefix <IPv6 network assigned by provider>::/64 {

AdvOnLink on;

AdvAutonomous on;

AdvRouterAddr on;

AdvValidLifetime infinity;

};

RDNSS <IPv6 address of the physical host> {};

route 64:ff9b::/96 {

AdvRouteLifetime infinity;

};

};

The route section advertises that this system routes the 64:ff9b::/96 network. Only with this definition do the virtual servers know where to send packets for the synthesized IPv6 addresses of IPv4-only servers.

IPv6 route advertisement is prepared for dynamically changing routes. In our setup, however, all routes are static. Therefore, prefix and route advertisements are announced with "infinite" lifetime.
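Only the prefix and the host address differ between installations, so the file lends itself to a template. The following POSIX shell sketch uses a hypothetical function name. It prints the configuration from above for a given /64 prefix and host address. The interface name br0 is hard-coded, as everywhere in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print the radvd.conf shown above.
# $1: the provider's /64 prefix, e.g. 2a01:4f8:1:3::/64
# $2: the IPv6 address of the physical host (announced as DNS resolver)
radvd_conf() {
    cat <<EOF
interface br0 {
    AdvSendAdvert on;
    AdvManagedFlag off;
    AdvOtherConfigFlag off;
    AdvDefaultPreference high;
    prefix $1 {
        AdvOnLink on;
        AdvAutonomous on;
        AdvRouterAddr on;
        AdvValidLifetime infinity;
    };
    RDNSS $2 {};
    route 64:ff9b::/96 {
        AdvRouteLifetime infinity;
    };
};
EOF
}
```

For example, radvd_conf 2a01:4f8:1:3::/64 2a01:4f8:1:3:6745:a24b:cc39:9d1 > /etc/radvd.conf. Afterwards, restart radvd and check its announcements with radvdump.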

|

Use Google's DNS64 servers

If you opted for the Google DNS64 servers to do the job, write instead

    RDNSS 2001:4860:4860::6464 2001:4860:4860::64 {
        AdvRDNSSLifetime infinity;
    };

This announcement can also have an infinite lifetime. Even if Google changed their server addresses, the definition here stays static. |

A radvd configuration must always be read as advertisement of the machine serving it.

So, you do not write something like "service X is on machine Y" but "This machine offers X".

Having this in mind, the configuration advertises all three network settings needed by the virtual machines:

- The prefix section defines that this host announces itself as router (AdvRouterAddr) to the given network and allows the machines to use SLAAC for generating their own IPv6 addresses (AdvAutonomous).
- The RDNSS section declares this machine to be the DNS resolver for the virtual machines.
- The route section adds the static route for NAT64 IP addresses to this machine.

Start radvd and make it a permanent service (coming up automatically after reboot) using

    systemctl start radvd
    systemctl enable radvd

If you start radvdump soon after starting radvd, you will see the announcements sent by radvd at irregular intervals. They should contain the network router, the DNS server and the NAT64 route. Note that radvd switches to rather long intervals between the advertisements after some time if no one is listening.

|

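Spurious auto-configured routes on br0

After starting radvd, look at the addresses of the bridge with ip -6 addr show dev br0. If you see an entry like

    inet6 2a01:4f8:1:2345:abc:4680:1:22/64 scope global dynamic mngtmpaddr noprefixroute
       valid_lft 85234sec preferred_lft 14943sec

your bridge is responding to the network announcements. Go back to the network configuration above and make sure the bridge ignores router advertisements (accept-ra: false in Netplan, IPv6AcceptRA=no with systemd-networkd). Afterwards, the entry should look like

    inet6 2a01:4f8:1:2345:abc:4680:1:22/64 scope global
       valid_lft forever preferred_lft forever

|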
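You can script this check as well. In the output of ip, addresses with a limited lifetime carry the dynamic flag; on this bridge, such addresses come from router advertisements. Statically configured addresses do not carry the flag. The following POSIX shell sketch uses a hypothetical function name and lists the dynamic addresses. On a correctly configured bridge, it prints nothing.

```shell
#!/bin/sh
# Print the IPv6 addresses that "ip -6 addr" marks as dynamic, i.e. addresses
# with a limited lifetime (here: configured from router advertisements).
dynamic_ipv6_addrs() {
    awk '$1 == "inet6" && / dynamic( |$)/ { print $2 }'
}
```

Used like this: ip -6 addr show dev br0 | dynamic_ipv6_addrs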

If you ever change the configuration, restart radvd and check its output with radvdump. It should contain both the DNS server and the NAT64 route.

|

The nasty Hetzner pitfall

In their own documentation, Hetzner also describes how to set up radvd. For the DNS servers, however, they use IPv6 example addresses from the 2001:db8 realm. It took me three days and severe doubts about Hetzner’s IPv6 setup to find out that my only mistake was copying these example addresses for the DNS server into the configuration. Don’t make the same mistake…

|

You have now prepared everything for the IPv6-only virtual machines to come: They get their network configuration through the centrally administered radvd. The advertised setup includes a name server with DNS64 and a NAT64 route to access IPv4-only systems.

|

About non-virtual network setups

So far, this document describes how to set up a root server with virtual machines. NAT64/DNS64, however, is completely independent of that. If you administrate a (real) computer network and want to lay the ground for IPv6-only machines in it, do exactly the same with your physical machines: Install Tayga and the DNS64-capable bind9 on the router behind which the IPv6-only systems reside. This might be the "firewall" of classical setups. Then, your actual computers play the role of the virtual machines in this guide.

|

5. Install KVM and finish the setup

We’re now ready for the final steps! Our network is configured far enough so that we really can start installing virtual machines on our system. For this, we of course need KVM.

5.1. Install libvirtd and its environment

For Ubuntu, I followed the first steps of this guide. On Ubuntu 22.04 the installation command is

apt install bridge-utils libguestfs-tools libosinfo-bin libvirt-clients libvirt-daemon-system libvirt-daemon virtinst qemu qemu-system-x86

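On Ubuntu 20.04 or 18.04, the list of packages is slightly different:

    apt install bridge-utils libguestfs-tools libosinfo-bin libvirt-daemon libvirt-daemon-system qemu-kvm qemu-system virtinst virt-top

Older setups used the following shorter command. It relies on the libvirt-bin package, which later Ubuntu releases no longer ship:

    apt install bridge-utils libvirt-bin qemu qemu-kvm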

This will install a rather large number of new packages on your host. Finally, it will be capable to serve virtual machines.

5.2. Load the virtual network module

Next step is to load the vhost_net module into the kernel and make it available permanently. This increases the efficiency of networking for the virtual machines as more work can be done in kernel context and data can be copied less often within the system. Issue two commands:

    modprobe vhost_net
    echo "vhost_net" >> /etc/modules

The libvirtd daemon should already be up and running at this point. If this is for any reason not the case, start and enable it with the usual systemctl commands or whatever the init system of your host server requires to do this.
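Running the echo command twice adds vhost_net twice to /etc/modules, which is harmless but untidy. If you put these steps into a script that may run more than once, a guarded append avoids that. The following POSIX shell sketch uses a hypothetical helper name.

```shell
#!/bin/sh
# Append a line to a file only if the file does not already contain exactly
# that line; a missing file is created.
append_once() {
    file=$1
    line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

Used like this: modprobe vhost_net && append_once /etc/modules vhost_net. Afterwards, lsmod | grep vhost_net shows whether the module is loaded.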

|

Do NOT install dnsmasq on Ubuntu 20.04

If you look into the start messages with [...] Ubuntu 22.04 does not seem to have this quirk. Note that even if you do not install dnsmasq, you will have a [...] |

5.3. Create a non-root user on the system.

To simplify installation and administration of your virtual machines, you should add a "normal" user to the system and allow that user to administrate the virtual machines.

- Create the user with adduser --disabled-password --gecos "<user name>" <login>.
- Add the user to the libvirt group with usermod -a -G libvirt <login>.
- Put your ssh public key into .ssh/authorized_keys of the user.

You should perform a final reboot after these steps to be sure that everything works together correctly and comes up again after a reboot.

Well, that’s it! Our system can get its first virtual machine!

6. install-kvm-host.sh: Install the physical host automatically

As mentioned before, you can perform most parts of the installation of the physical host by the install-kvm-host.sh script which is included in the source code archive of this guide.

6.1. The general process

Currently, install-kvm-host.sh can only be used if

- the target system is a Hetzner root server and
- it has a freshly installed Ubuntu 22.04.

In this case, generally perform the following steps:

- Install the server with Hetzner’s installimage script as described in the Initial setup of the host system at Hetzner’s section.
- Download the script from the github repository of the IPv6 First Guide (or clone the repo).
- Copy it somewhere below root’s home directory on the target server.
- Execute the script as root on the target server.
- Add the DNS entries for the physical host.

The script will perform all the steps described in the previous sections. The steps are grouped into three stages:

- Prepare the general environment and the networking settings of the physical host.
- Install and configure Tayga, bind, and radvd.
- Install libvirtd and finish the setup.

In normal operation mode, install-kvm-host.sh will reboot the server between these stages to ensure a stable, predictable configuration.

On the system I developed and tested the script, the stages needed the following time:

| Stage | Duration |
|---|---|
| 1 | ~3.5 minutes |
| 2 | ~1 minute |
| 3 | ~5 minutes |

The main part of the time is actually spent downloading the needed packages. The configuring steps only need some seconds in each stage.

My server also needed up to 7 minutes for each reboot. The reason for these delays is unknown. Hopefully this is special to that hardware.

As the system reboots in between, the connection to the console is lost. The script logs all console output also to /var/log/install-kvm.log so that one knows what actually happened and whether any errors occurred.

Currently, discovering the end of the script’s operation is done by monitoring the server. If it has rebooted three times, the script has finished. If it has been successful, there is neither a /var/lib/install-kvm/config nor a /var/lib/install-kvm/stage file. In any case, /var/log/install-kvm.log contains the output of all (executed) script stages and ends either in a success message or with an error message describing what went wrong.

6.2. Operation modes

install-kvm-host.sh has three operation modes:

- normal interactive: After evaluating the command line parameters, a summary is shown. The script only continues after the user presses Return.
- no reboot: The script performs as in normal interactive mode but does not reboot automatically after each stage. It’s up to the user to reboot and call the script manually again after each reboot. This allows controlling the script’s operation in each stage on the command line. The parameter -nrb, --no-reboot or --noreboot invokes the no reboot mode.
- batch: The script does not wait for the user to press Return but starts the operation immediately if the parameters are valid. This can be used in automated scenarios, e.g. execution by an Ansible playbook. The parameter -b or --batch invokes the batch mode.

6.3. Optional operations

Currently, install-kvm-host.sh knows about two optional operations:

- Setting the timezone: If a -tz or --timezone parameter is given (e.g. -tz "Europe/Berlin"), the script sets the time zone of the physical host in the first stage using the timedatectl command of systemd. Hetzner’s installimage always initializes the time zone as UTC, so it could make sense to correct this.
- Creating an additional user: If a -l or --login parameter is given (e.g. -l dh), an additional user is created at the end of the installation process. This user is added to the libvirt group and gets root’s list of authorized ssh keys for login. If a -n or --name parameter is given (e.g. --name "Dirk Hillbrecht"), it is used as the name of the user (otherwise, the name is set to the login).
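The following POSIX shell sketch shows one way to parse these options. It is not taken from install-kvm-host.sh, and the variable names are assumptions. It also covers the -b and -nrb switches of the operation modes and rejects options that lack their value.

```shell
#!/bin/sh
# Sketch: parse the documented options of install-kvm-host.sh into variables.
parse_args() {
    batch=no noreboot=no timezone= login= name=
    while [ $# -gt 0 ]; do
        case "$1" in
            -b|--batch)
                batch=yes ;;
            -nrb|--no-reboot|--noreboot)
                noreboot=yes ;;
            -tz|--timezone|-l|--login|-n|--name)
                # These options take a value; refuse a missing one
                if [ $# -lt 2 ]; then
                    echo "option $1 needs a value" >&2
                    return 1
                fi
                case "$1" in
                    -tz|--timezone) timezone=$2 ;;
                    -l|--login) login=$2 ;;
                    *) name=$2 ;;
                esac
                shift ;;
            *)
                echo "unknown parameter: $1" >&2
                return 1 ;;
        esac
        shift
    done
    # Without --name, the user's name defaults to the login
    if [ -n "$login" ] && [ -z "$name" ]; then
        name=$login
    fi
    return 0
}
```

A script would call parse_args "$@" || exit 1 at its start and then use the variables.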

6.4. How it works

install-kvm-host.sh is a lengthy Bash script. It has some nice features, which are described in more depth here:

6.4.1. Continuation over a reboot

To continue operation after a reboot, the script registers itself with the atd daemon and sets the execution time to "now". To prevent atd from executing the script before the reboot actually happens, install-kvm-host.sh simply suspends atd before registration. The whole process goes like this:

    myself=$(readlink -f "$0")
    systemctl stop atd
    echo "$myself" | at now 2>/dev/null
    ( sleep 5 ; reboot ) &
    exit 0

On startup, atd is launched again, finds the command which is scheduled in the past (now at the moment of insertion) and executes it. install-kvm-host.sh finds the stage to continue with in /var/lib/install-kvm/stage.
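The stage bookkeeping itself can be kept very small. The following POSIX shell sketch illustrates the idea; the function names are hypothetical. Treating a missing stage file as the start of stage 1 is an assumption, based on the fact that a successful run leaves no stage file behind.

```shell
#!/bin/sh
# Sketch of stage bookkeeping across reboots.
STATEDIR=${STATEDIR:-/var/lib/install-kvm}

current_stage() {
    # Assumption: no stage file means a fresh start with stage 1
    if [ -f "$STATEDIR/stage" ]; then cat "$STATEDIR/stage"; else echo 1; fi
}

next_stage() {
    mkdir -p "$STATEDIR"
    echo $(( $(current_stage) + 1 )) > "$STATEDIR/stage"
}

finish_stages() {
    # After the last stage, neither the stage nor the config file remains
    rm -f "$STATEDIR/stage" "$STATEDIR/config"
}
```

A script built this way would call next_stage before scheduling itself with at and rebooting, and finish_stages after the last stage.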

An important point is to carry the command line parameters over the reboot, too. This is done by writing the parameters as export statements into /var/lib/install-kvm/config and sourcing this file at the beginning of all follow-up stages:

    if [ "$currentstage" = "1" ]
    then
      while [ -n "$1" ] ; do
        case "$1" in
          -l|--login) shift ; export login="$1" ;;
        esac
        shift
      done
      rm -f "$conffile" && touch "$conffile"
      echo "export login=\"$login\"" >> "$conffile"
    else
      . "$conffile"
    fi

6.4.2. Obtaining network parameters

install-kvm-host.sh calls ip to read several network settings.

However, it then uses the JSON mode ip -j to get the information as a structured JSON document and parses it with the command line tool jq:

    # returns the name of the network interface which has the public route
    publicInterface() {
      ip -j route | jq '.[] | select(.dst=="default") | .dev' | sed 's/"//g'
    }

For YAML files, there is a similar tool, yq:

    # Read the DNS forwarders from the Netplan configuration
    getForwarders() {
      cat /etc/netplan/01-netcfg.yaml | \
        yq -o y '.[] | .bridges.br0.nameservers.addresses'
    }

Unfortunately, yq is not available as an Ubuntu/Debian .deb package in the distribution so far, so install-kvm-host.sh installs it directly from the yq distribution archive. As yq is written in Go, it is distributed as a self-contained binary.

6.4.3. man-page generation

install-kvm-host.sh contains its own man-page as an Asciidoc document. It can be generated by calling the script with the --asciidoc parameter. An Asciidoc processor creates the man page with its output. The --help parameter just calls man $(basename $0) or, if the man page has not been generated, directly emits the result of $0 --asciidoc:

    [ "x$1" = "x--asciidoc" ] && { cat <<EOA
    = `basename $0`(1)

    == NAME

    `basename $0` - Transforms a Hetzner root server into a KVM physical host with IPv6-first approach for the virtual machines.

    [...]
    EOA
    exit 0 ;
    }

    [ "x$1" = "x-h" -o "x$1" = "x--help" ] && { man `basename $0` || $0 --asciidoc | less ; exit 0 ; }

6.4.4. Output to log file

install-kvm-host.sh uses tee to pass the standard output not only to the console, but also to /var/log/install-kvm.log. This is applied on the highest level function call so that all output of the executing functions is appropriately passed to all receivers.

    performStage "$currentstage" | tee -a $logfile

The virtual machines

7. Overview on virtual machines

At this moment, we have a fully operational IPv6 autoconfiguration environment. Every modern operating system will configure itself and have full IPv6 connectivity.

Additionally, through the NAT64/DNS64 address translation layer, all the IPv6-only virtual machines will be able to access IPv4-only servers seamlessly and without any additional configuration.

7.1. Initializing a virtual machine

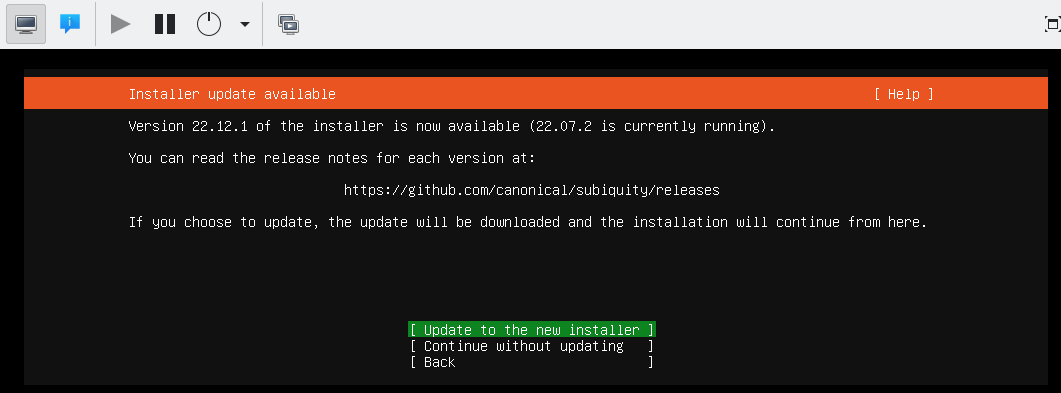

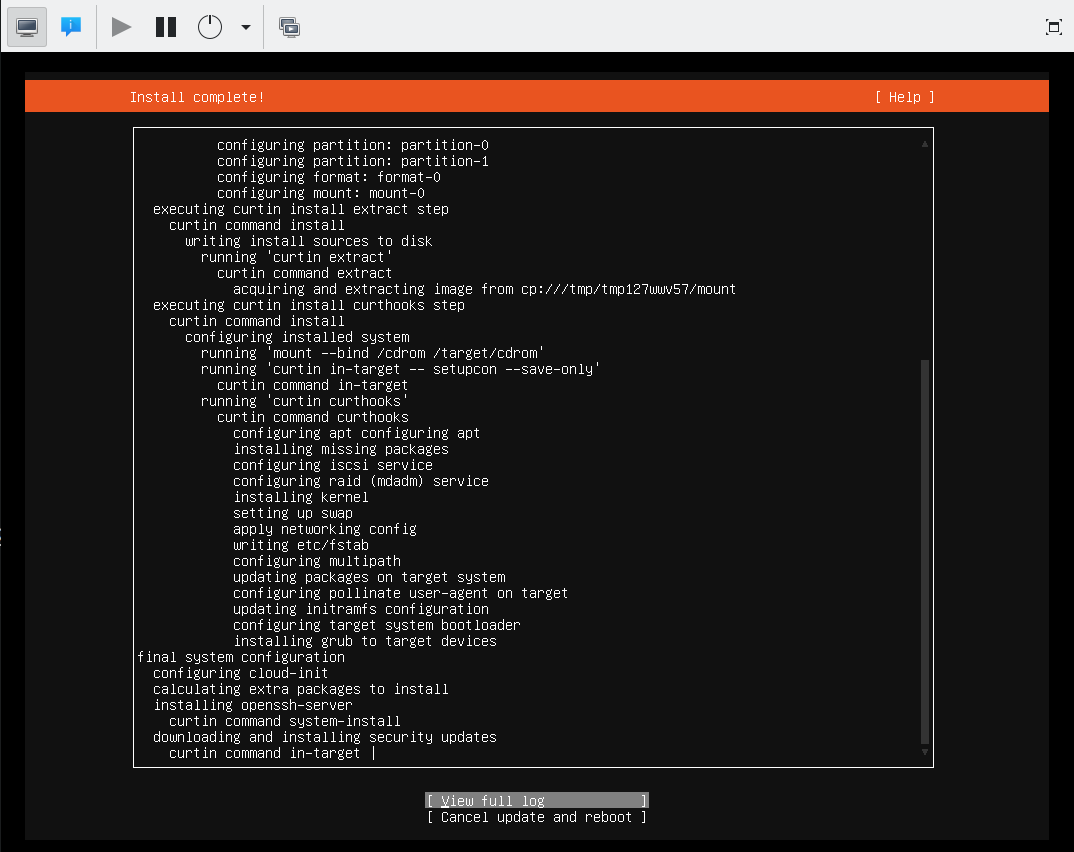

Installing a virtual machine might sound difficult if you have never done this before. In fact, it is not. Once you get used to it, it is even simpler than the remote installation on a hosted system. On the physical machine, you depend on the installation procedures the hosting provider offers. KVM, in contrast, offers you a more or less complete virtualized graphical console which lets you act just as if you were sitting in front of the (virtual) computer's monitor. This way, you can install whatever you like.

Furthermore, if you make a configuration mistake on the physical host, you might end up with a broken machine. If this happens in a virtual machine, you have several ways to solve the problem: You can connect to the console and log in directly without network access. If the machine does not boot any more, you can even mount the virtual hard disk on the physical machine and try to fix it. And if the machine is broken beyond repair for whatever reason, you can just throw it away and start over with a fresh installation.
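For a qcow2 image, mounting the virtual disk on the physical host can be done with qemu-nbd. The following is a minimal sketch, assuming the guest is shut off (never attach the image of a running machine), the image lies in libvirt's default pool /var/lib/libvirt/images, and /dev/nbd0 is not in use. attach_disk and detach_disk are hypothetical helper names; setting RUN=echo only prints the commands.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to inspect the disk of a SHUT-OFF virtual machine on
# the physical host. Run as root. With RUN=echo, commands are only printed.

# attach_disk IMAGE: expose a qcow2 image as /dev/nbd0 and list its partitions
attach_disk() {
  local image=$1
  $RUN modprobe nbd max_part=8 || return     # load the network block device driver
  $RUN qemu-nbd --format=qcow2 --connect=/dev/nbd0 "$image" || return
  $RUN lsblk /dev/nbd0                       # choose the partition to mount from this list
}

# detach_disk: release /dev/nbd0 again (unmount its partitions first)
detach_disk() {
  $RUN qemu-nbd --disconnect /dev/nbd0
}

# Example:
#   attach_disk /var/lib/libvirt/images/vm1.qcow2
#   mount /dev/nbd0p2 /mnt    # partition number depends on the guest's layout
#   ... fix things ...
#   umount /mnt && detach_disk
```

If the guest keeps its root file system in LVM, the volume group has to be activated with vgchange -ay before mounting and deactivated with vgchange -an before detaching.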

I suggest starting with a throw-away virtual machine. It will not contain any "real" services but will reveal any remaining problems in the setup. Furthermore, it allows you to test and learn the whole process of installing a virtual machine in this setup:

- Copy the installation ISO image for the system to install onto the host.
- Connect to the KVM system using virt-manager. Of course, you might also use another client, but I find virt-manager rather easy to use.
- Use the ssh connection of the normal user created on the host.
- Start the creation of the virtual machine.
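On the command line, the first steps could look like the following sketch. It assumes that the normal user on the host (here the placeholder dh on the placeholder host merlin.example.org) may access libvirt, which on Ubuntu means membership in the libvirt group. kvm_uri is a hypothetical helper that builds the connection URI which both virsh and virt-manager accept with --connect.

```shell
#!/usr/bin/env bash
# kvm_uri USER HOST: hypothetical helper returning the libvirt URI of the
# host's system instance, tunnelled through ssh as USER.
kvm_uri() {
  printf 'qemu+ssh://%s@%s/system\n' "$1" "$2"
}

# Example with placeholder names (not executed here):
#   scp installer.iso dh@merlin.example.org:                         # copy the ISO
#   virsh --connect "$(kvm_uri dh merlin.example.org)" list --all    # connection check
#   virt-manager --connect "$(kvm_uri dh merlin.example.org)"        # start the GUI
```

If the emulator cannot read the ISO in the user's home directory, moving it to libvirt's default pool directory /var/lib/libvirt/images (as root on the host) is a simple fix.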

From here on, things become rather standard: we are installing a guest system on a KVM host. My best practices are these:

- Assign as many CPUs to the virtual machine as the hardware has. Reduce the number of CPUs only if you suspect that the virtual machine grabs too many resources.
- Use a qcow2 image file for the virtual hard disk. It is the most flexible option when it comes to migrating the virtual machine, and today's file systems cope quite well with the double indirection.
- Give the virtual machine definition the same name the system will later have.

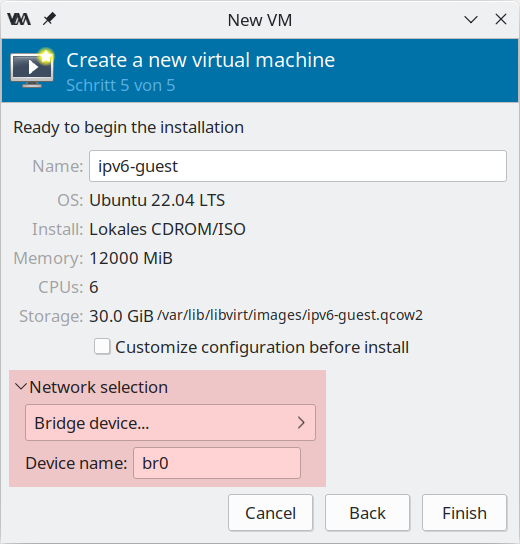

The one interesting point is the network configuration at the very end of the definition process. Here, open "Network selection" before creating the machine, select "Bridge device" as network type, and enter the name of the bridge, which is br0 in our setup:

|

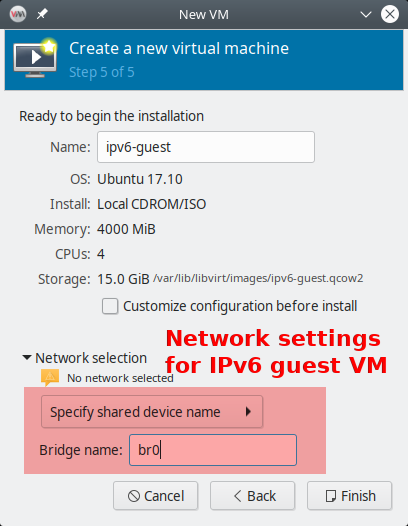

Network dialog in older versions of virt-manager

virt-manager versions included in Ubuntu before 22.04 organized the network selection dialog slightly different. You just select "Name of common device" and give the bridge’s name there.

Figure 4. Select the network device in older virt-manager

|

If you are ready, press "Create" and summon your first virtual system.
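The same definition can also be created without the GUI using virt-install. The following is a sketch under stated assumptions: create_vm is a hypothetical wrapper, the memory size, default disk size and OS variant are placeholder values, and the qcow2 image is created in libvirt's default storage pool. It applies the practices above (all host CPUs, qcow2, same name, bridge br0); DRY_RUN=1 only prints the resulting command line.

```shell
#!/usr/bin/env bash
# create_vm NAME ISO [DISK_GB]: hypothetical wrapper around virt-install that
# applies the practices above. Run it on the physical host (nproc counts the
# CPUs of the machine it runs on). With DRY_RUN=1 it only prints the command.
create_vm() {
  local name=$1 iso=$2 disk_gb=${3:-20}
  local -a cmd
  cmd=(
    virt-install
    --name "$name"                       # same name the system will have
    --vcpus "$(nproc)"                   # as many CPUs as the host has
    --memory 4096                        # MiB; placeholder value
    --disk "size=$disk_gb,format=qcow2"  # new qcow2 image in the default pool
    --cdrom "$iso"
    --os-variant ubuntu22.04             # placeholder; list IDs with: osinfo-query os
    --network bridge=br0                 # attach to the bridge of the host
    --graphics vnc
    --noautoconsole                      # open the console in virt-manager instead
  )
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example: DRY_RUN=1 create_vm vm1 /var/lib/libvirt/images/installer.iso
```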

7.2. DNS and auto-boot

To work with the long IPv6 addresses conveniently, DNS is almost mandatory. So, add the virtual machine to your domain now:

- Find the virtual machine's IP address. On Linux systems, ip a does the job. You may also derive it manually from the virtual machine's MAC address (as SLAAC uses it, too).
- Create an entry in the DNS zone of the system. Note that you only enter an AAAA record, not an A record for an IPv4 address. The system just has no IPv4 address…
- In my opinion, it also makes sense to always create a reverse IP entry for IPv6 hosts. If your DNS AAAA entry vanishes for any reason, you still have the reverse IP entry, which maps the IP address to the name. Reverse IP entries are always managed in the DNS realm of the owner of the IP network. In my case, they are edited in Hetzner's robot interface.
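For an IPv6 address, the reverse entry lives below ip6.arpa, with one label per hexadecimal digit of the fully expanded address in reverse order. Hetzner's robot interface takes the plain address, but if you maintain a reverse zone yourself, the following sketch computes the name (dig -x does the same conversion for lookups). expand_ipv6 and ptr_name are hypothetical helpers; they assume a syntactically valid address without a zone index or an embedded dotted IPv4 part.

```shell
#!/usr/bin/env bash
# expand_ipv6 ADDR: hypothetical helper printing the 32 hex digits of ADDR,
# with "::" expanded and colons removed. Assumes a valid address without
# zone index or dotted IPv4 tail.
expand_ipv6() {
  local addr=$1 head tail g i out=""
  local -a h=() t=()
  if [[ $addr == *::* ]]; then
    head=${addr%%::*}            # groups before "::"
    tail=${addr#*::}             # groups after "::"
  else
    head=$addr tail=""
  fi
  if [[ -n $head ]]; then IFS=: read -r -a h <<< "$head"; fi
  if [[ -n $tail ]]; then IFS=: read -r -a t <<< "$tail"; fi
  for g in "${h[@]}"; do out+=$(printf '%04x' $((16#$g))); done
  # "::" stands for as many zero groups as are missing to reach eight
  for (( i = ${#h[@]} + ${#t[@]}; i < 8; i++ )); do out+=0000; done
  for g in "${t[@]}"; do out+=$(printf '%04x' $((16#$g))); done
  printf '%s\n' "$out"
}

# ptr_name ADDR: hypothetical helper printing the ip6.arpa name of ADDR
ptr_name() {
  local hex rev="" i
  hex=$(expand_ipv6 "$1")
  for (( i = ${#hex} - 1; i >= 0; i-- )); do rev+="${hex:i:1}."; done
  printf '%sip6.arpa\n' "$rev"
}

# Example: ptr_name 2a01:4f8:1:2:a:bc:579:a
```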

The SLAAC mechanism derives the IP address from the MAC address of the virtual machine. So, the address is static even though it has not been configured explicitly anywhere.
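The derivation follows the modified EUI-64 scheme: the 48-bit MAC address is split in the middle, ff:fe is inserted, and the universal/local bit (0x02) of the first byte is inverted; the result forms the lower 64 bits of the address within the /64 prefix. A minimal sketch, assuming the guest really forms its address this way (systems configured for stable-privacy addresses or privacy extensions use different interface identifiers) and that the prefix is passed as its first four groups; eui64_address is a hypothetical helper.

```shell
#!/usr/bin/env bash
# eui64_address PREFIX MAC: hypothetical helper printing the SLAAC address a
# guest forms with the modified EUI-64 scheme. PREFIX is the /64 written as
# its first four groups, e.g. "2a01:4f8:1:3" for 2a01:4f8:1:3::/64.
eui64_address() {
  local prefix=$1 first
  local -a o
  IFS=: read -r -a o <<< "$2"            # the six MAC bytes
  first=$(( 0x${o[0]} ^ 0x02 ))          # invert the universal/local bit
  # split the MAC in the middle and insert ff:fe
  printf '%s:%x:%x:%x:%x\n' "$prefix" \
    $(( (first << 8) | 0x${o[1]} )) \
    $(( (0x${o[2]} << 8) | 0xff )) \
    $(( (0xfe << 8) | 0x${o[3]} )) \
    $(( (0x${o[4]} << 8) | 0x${o[5]} ))
}

# Example: libvirt gives KVM guests MAC addresses starting with 52:54:00
#   eui64_address 2a01:4f8:1:3 52:54:00:12:34:56
```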

If you want your virtual machine to be started automatically when the physical host starts up, you have to set the corresponding flag in the KVM configuration. In virt-manager, you find it in the "Boot options" of the virtual machine.
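On the command line of the physical host, the same flag is set with virsh autostart. A small sketch, assuming a hypothetical wrapper enable_autostart that takes the libvirt domain names (the names shown in virt-manager); overriding VIRSH with echo turns it into a dry run.

```shell
#!/usr/bin/env bash
# enable_autostart DOMAIN...: hypothetical wrapper marking libvirt domains to
# be started at host boot. Override VIRSH (e.g. VIRSH=echo) for a dry run.
enable_autostart() {
  local vm
  for vm in "$@"; do
    ${VIRSH:-virsh} autostart "$vm" || return
  done
}

# Example (on the physical host):
#   enable_autostart vm1 vm2
#   virsh dominfo vm1 | grep Autostart    # should report "enable"
#   virsh autostart --disable vm1         # revert for a single machine
```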

7.3. Adding an IPv4 address to the virtual machine

The first advice for this topic: Don’t do it!

Your virtual machine is fully connected to the internet and can be reached from anywhere and for any service. The only restriction is that a connecting party must use IPv6. More and more systems, however, have IPv6 connectivity these days.

Even if the virtual machine hosts a service which must also be accessible by IPv4-only clients, a direct IPv4 connection for the virtual machine is not mandatory. Especially for HTTP-based services, we will configure a reverse proxying scheme on the physical host which allows transparent connections to IPv6-only web servers on the virtual machines for IPv4-only clients. So, only configure a direct IPv4 connection into a virtual machine if it runs a service which requires it.

In a provider-based scenario, the provider has to assign an additional IPv4 address to the physical host. Then, the physical host must be configured to pass this IPv4 address on to the virtual machine. Finally, the operating system on the virtual machine must handle these connections. Proceed as follows:

Obtain an additional IPv4 address for your server from your hosting provider. If a network of IPv4 addresses is already assigned to your server, you can, of course, use one of those.


Provider-dependent setup

The actual setup depends on the network scheme of your provider. Hetzner Online implements a direct peer-to-peer routing of IPv4 addresses. We continue based on this setup.


7.3.1. The physical host

If the physical host is configured with Netplan, add the route to the virtual guest explicitly:

root@merlin ~ # cat /etc/netplan/01-netcfg.yaml
network:
  [...]
  bridges:
    br0:
      [...]
      addresses:
        - 241.61.86.241/32
        - 2a01:4f8:1:3::2/64
      routes:
        [...]
        - to: 241.61.86.104/32  # <-- IPv4 address of the virtual machine
          via: 241.61.86.241    # <-- IPv4 address of the host
        [...]

After adding these entries, use netplan try to test the changes. If the configuration still works (the countdown may stall for 4 to 5 seconds), press Enter to make the changes permanent.

More information needed

Unfortunately, there is not much information about Netplan-based network configuration on the internet. Let me know if this configuration did not work for you.

On a physical host with a systemd-networkd setup, add an entry to the bridge device configuration. In the description above, this is the file /etc/systemd/network/21-br0-conf.network. Add the following lines:

[Address]
Address=<IPv4 address of the host>
Peer=<IPv4 address for the virtual machine>

The file now contains two [Address] sections with the same Address but different Peer entries. That's intentional.

After changing the configuration, restart the service with systemctl restart systemd-networkd.
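If several virtual machines get IPv4 addresses, one such section per machine is appended. The following sketch uses a hypothetical helper peer_address_section to print it; the example values are the host and guest addresses from the Netplan variant above, and the host address should be written exactly as in the file's existing [Address] section.

```shell
#!/usr/bin/env bash
# peer_address_section HOST_IPV4 VM_IPV4: hypothetical helper printing an
# additional [Address] section with the host address and the VM as peer,
# preceded by a blank line to separate it from the existing content.
peer_address_section() {
  printf '\n[Address]\nAddress=%s\nPeer=%s\n' "$1" "$2"
}

# Example (as root on the physical host):
#   peer_address_section 241.61.86.241 241.61.86.104 \
#     >> /etc/systemd/network/21-br0-conf.network
#   systemctl restart systemd-networkd
```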

7.3.2. The virtual machine

On the virtual machine, add the IPv4 address to the Netplan configuration, usually in /etc/netplan/01-netcfg.yaml. The complete file reads like this:

network:
  version: 2
  renderer: networkd
  ethernets:
    ens3:
      dhcp6: yes
      addresses: [ IPv4 address for the virtual machine/32 ]
      routes:
        - to: 0.0.0.0/0
          via: IPv4 address OF THE PHYSICAL HOST
          on-link: true

The physical host is the IPv4 default route!

Note that, at least in the Hetzner Online network, it is crucial that you declare the physical host as the default gateway for IPv4 traffic from the virtual machines! If you set the gateway given by Hetzner, traffic is not routed. In this case, you can reach the guest from the host but not from anywhere else via IPv4.

On the virtual machine, you can apply your changes with netplan try and pressing Enter, too. Afterwards, check the IPv4 routes; there should be only one entry:

# ip -4 r
default via <IPv4 address of physical host> dev ens3 proto static onlink
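When several virtual machines are set up this way, the check can be automated. A sketch with a hypothetical function check_default_via, which reads ip -4 route output on standard input and succeeds only if it contains exactly one route and that route is the default via the given host address (the example uses the host address from the Netplan example above):

```shell
#!/usr/bin/env bash
# check_default_via HOST_IPV4: hypothetical check reading `ip -4 route` output
# on stdin; succeeds only if there is exactly one route and it is the default
# route via HOST_IPV4.
check_default_via() {
  awk -v gw="$1" '
    NF > 0 { n++ }                                      # count non-empty lines
    $1 == "default" && $2 == "via" && $3 == gw { ok = 1 }
    END { exit !(n == 1 && ok) }
  '
}

# Example on the virtual machine:
#   ip -4 route | check_default_via 241.61.86.241 && echo "IPv4 routing OK"
```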

Information on systemd-networkd-based setups missing

I have not configured systemd-networkd-based virtual machines so far, so I do not know how to set them up correctly. But it should be easy, as only a static address and a gateway entry are needed.

Depending on the installation routine of the operating system, there could be another thing to change. Check whether your /etc/hosts contains a line

127.0.1.1 <name of virtual machine>

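This line might have been added during installation, as your system had no IPv4 connectivity at all at that stage. Now that the machine has a real IPv4 address, the entry makes the machine's own name resolve to the loopback address 127.0.1.1, which can mislead services that look up their own address. Replace it with the following line, which lists the fully qualified name first, as hosts(5) treats the first name as the canonical host name:

<IPv4 address of virtual machine> <name of virtual machine>.<domain of virtual machine> <name of virtual machine>

e.g.

1.2.3.4 virthost.example.org virthost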
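The exchange can be scripted with sed. A minimal sketch, assuming GNU sed (for in-place editing with -i) and a hypothetical helper fix_hosts_entry that takes the file, the IPv4 address and the host names; the names are written in the order given. Try it on a copy before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# fix_hosts_entry FILE IPV4 NAME...: hypothetical helper replacing the
# installer's "127.0.1.1 ..." line in FILE by "IPV4 NAME...".
# Requires GNU sed for in-place editing (-i).
fix_hosts_entry() {
  local file=$1 ip=$2
  shift 2
  sed -i "s/^127\.0\.1\.1[[:space:]].*/$ip $*/" "$file"
}

# Example: fix_hosts_entry /etc/hosts 1.2.3.4 virthost.example.org virthost
```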

Finally, add DNS and reverse DNS entries for the IPv4 address of the virtual machine. Now, it is directly accessible via both IPv4 and IPv6.

7.3.3. Name service on IPv4-enhanced virtual machines

If you have not defined DNS64 access control lists in the bind9 configuration on the physical host, an IPv4-enhanced virtual machine will still connect to IPv4-only servers via IPv6! The reason is the DNS64-enhanced name server. It will deliver IPv6 addresses for such servers and the outgoing connection will be established through the NAT64 gateway.

Normally, this has no drawbacks. We add IPv4 connectivity only so that clients can reach a service on the virtual machine via IPv4, and such incoming connections work with the configuration described above. Remember that whether an outgoing connection uses NAT64 is decided at the DNS layer. My advice is to generally keep it this way and let the virtual machines use the address translation scheme for outgoing IPv4 connections anyway.

There are exceptions to this rule, however. The most important one is a virtual machine acting as an e-mail server. It must establish outgoing connections to IPv4 servers via IPv4, as its connections are otherwise blocked. In such a case, exclude the virtual machine from DNS64 in the physical host's DNS configuration:

acl dns64clients {
    !2a01:4f8:1:2:a:bc:579:a;  # IPv6 address of virtual machine
    # Add more if needed (with prepended "!")
    [...]
    any;                       # Grant access for all others
};

options {
    [...]
    dns64 64:ff9b::/96 {
        clients { dns64clients; };  # Refer to the ACL defined above
    };
};

If IPv4 enhancement and direct IPv4 access are the norm for your virtual machines, you may also define the access control list the other way around. See the section on DNS64 ACLs.
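To see which behaviour a virtual machine gets, query the well-known name ipv4only.arpa (RFC 7050), which only has the A records 192.0.0.170 and 192.0.0.171 and no AAAA records. A machine served by DNS64 receives synthesized AAAA records under 64:ff9b::/96; an excluded machine receives none. The hypothetical helper nat64_address computes the synthesized address for a given IPv4 address, embedding the 32-bit IPv4 address in the last two groups of the /96 prefix as described in RFC 6052:

```shell
#!/usr/bin/env bash
# nat64_address IPV4: hypothetical helper printing the address DNS64
# synthesizes for IPV4 under the well-known prefix 64:ff9b::/96.
nat64_address() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  # 10# forces decimal, so octets like "08" are not read as octal
  printf '64:ff9b::%x:%x\n' $(( (10#$a << 8) | 10#$b )) $(( (10#$c << 8) | 10#$d ))
}

# Example check on a virtual machine:
#   dig +short AAAA ipv4only.arpa   # DNS64 client: 64:ff9b::c000:aa and 64:ff9b::c000:ab
#                                   # excluded machine: no output
```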

7.3.4. About IPv4 auto-configuration

This setup assigns IPv4 addresses statically. One could argue that the usual auto-configuration mechanisms, such as DHCP with MAC-address-based address assignment, should be used. This would, of course, be possible.

I opted against such setups as I don't think that they are necessary. The setup described here is based on IPv6 and can be run as an IPv6-only setup. For IPv6, we have complete connectivity and auto-configuration, which even yields static addresses, so no configuration entries are needed for individual machines.